Artificial intelligence (AI) is revolutionizing Learning Management Systems (LMS), transforming static e-learning into dynamic, tailored experiences. Whether you’re enhancing learner engagement, automating assessments, or gaining actionable insights through analytics, integrating AI into your LMS offers immense potential. Yet, as powerful as AI is, implementing it comes with significant challenges—technical, organizational, ethical, and cultural.

In this deep dive, we’ll explore these challenges and provide practical solutions. We’ll cover infrastructure, data management, user adoption, compliance, instructional design, and more—all aimed at helping you deploy AI in your LMS with confidence and clarity.

1. Selecting the Right AI Use Cases

The Mistake of AI for AI’s Sake

Many projects rush to add AI features without first identifying real pain points. For example, deploying a chatbot that learners never asked for adds complexity and can frustrate the very users it was meant to help.

Focus on Strategic, High-Impact Use Cases

Prioritize by:

- Learner needs (e.g., personalized recommendations, real-time feedback)

- Educator challenges (e.g., grading, analytics)

- Business metrics (e.g., completion rates, time-to-skill)
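One way to make this prioritization concrete is a weighted score per candidate use case. The sketch below is a hypothetical scheme: the 1 to 5 ratings, the weights, and the `UseCase` fields are illustrative assumptions rather than a standard method, so tune them to your own priorities.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    learner_need: int     # 1 (low) to 5 (high): how strongly learners need it
    educator_pain: int    # 1 to 5: how much educator effort it removes
    business_impact: int  # 1 to 5: expected effect on a tracked business metric


# Hypothetical weights; adjust them to reflect your organization's priorities.
WEIGHTS = {"learner_need": 0.4, "educator_pain": 0.3, "business_impact": 0.3}


def priority_score(uc: UseCase) -> float:
    """Weighted sum of the three prioritization criteria."""
    return (WEIGHTS["learner_need"] * uc.learner_need
            + WEIGHTS["educator_pain"] * uc.educator_pain
            + WEIGHTS["business_impact"] * uc.business_impact)


def rank_use_cases(candidates: list[UseCase]) -> list[tuple[str, float]]:
    """Return (name, score) pairs, highest priority first."""
    scored = [(uc.name, round(priority_score(uc), 2)) for uc in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    candidates = [
        UseCase("Automated grading", learner_need=3, educator_pain=5, business_impact=4),
        UseCase("General-purpose chatbot", learner_need=2, educator_pain=2, business_impact=2),
        UseCase("Personalized recommendations", learner_need=5, educator_pain=2, business_impact=4),
    ]
    for name, score in rank_use_cases(candidates):
        print(f"{score:.2f}  {name}")
```

With these sample ratings, automated grading ranks first and the general-purpose chatbot last, which is exactly the "AI for AI's sake" candidate the scoring is meant to filter out.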

Role-Based Solution Mapping

- Learners: Chatbots, adaptive paths, intelligent assistance

- Trainers: Automated scoring, content generation, analytics dashboards

- Administrators: Compliance alerts, predictive retention tools

By tying each AI feature to a measurable objective, such as a 10% reduction in dropout rate, you keep the work relevant and make its ROI measurable.

2. Data Strategy & Quality Management

AI runs on data. Without proper data architecture, your AI initiative will sputter.

Data Sources

- LMS usage logs

- Assessment results

- Forum interactions

- Employee profiles and job metadata

The Data Quality Challenge

Common pitfalls include:

- Incomplete logs (missing click data, video views)

- Inconsistent formatting (date/time fields, name formats)

- Missing demographics (role, cohort, region)
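Before building models, it helps to measure how often each pitfall occurs. This sketch assumes log rows arrive as dictionaries with hypothetical field names (`learner_id`, `event`, `timestamp`, `role`, `cohort`, `region`) and that timestamps should be ISO 8601; adapt both to your LMS export.

```python
from datetime import datetime

# Hypothetical field names; map these to your LMS export schema.
REQUIRED_EVENT_FIELDS = ("learner_id", "event", "timestamp")
DEMOGRAPHIC_FIELDS = ("role", "cohort", "region")


def is_iso8601(value) -> bool:
    """True if value parses with datetime.fromisoformat (ISO 8601 style)."""
    try:
        datetime.fromisoformat(value)
        return True
    except (TypeError, ValueError):
        return False


def profile_rows(rows: list[dict]) -> dict[str, int]:
    """Count rows affected by each common data-quality pitfall."""
    report = {"incomplete": 0, "bad_timestamp": 0, "missing_demographics": 0}
    for row in rows:
        # Incomplete logs: a core event field is absent or empty.
        if any(not row.get(f) for f in REQUIRED_EVENT_FIELDS):
            report["incomplete"] += 1
        # Inconsistent formatting: timestamp present but not ISO 8601.
        ts = row.get("timestamp")
        if ts and not is_iso8601(ts):
            report["bad_timestamp"] += 1
        # Missing demographics: any grouping attribute absent or empty.
        if any(not row.get(f) for f in DEMOGRAPHIC_FIELDS):
            report["missing_demographics"] += 1
    return report
```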

Building a Clean Data Pipeline

- Data Extraction: Pull activity logs through learning-data standards such as xAPI (statements stored in a Learning Record Store) or SCORM tracking data

- Data Lake / Warehouse: Store raw and processed data centrally

- ETL and Cleansing: Normalize, dedupe, and validate data

- Metadata tagging: Add context—learner group, content type, learning objective
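The cleansing and tagging steps above can be sketched as a small transform over xAPI statements. The `id`, `actor.mbox`, `verb.id`, `object.id`, `result.score.scaled`, and `timestamp` fields follow the xAPI statement structure; the `CATALOG` lookup, its URL, and the output column names are hypothetical, and only actors identified by `mbox` are handled.

```python
from datetime import datetime, timezone

# Hypothetical catalog for metadata tagging: activity IRI -> learning context.
CATALOG = {
    "https://lms.example.com/activities/intro-sql": {
        "content_type": "video",
        "objective": "sql-basics",
    },
}


def normalize_timestamp(raw: str) -> str:
    """Parse an ISO 8601 timestamp and re-emit it in UTC."""
    ts = datetime.fromisoformat(raw.replace("Z", "+00:00"))
    if ts.tzinfo is None:  # assumption: naive timestamps are already UTC
        ts = ts.replace(tzinfo=timezone.utc)
    return ts.astimezone(timezone.utc).isoformat()


def transform(statements: list[dict]) -> list[dict]:
    """Flatten, dedupe, validate, and tag xAPI-style statements."""
    seen_ids: set[str] = set()
    rows = []
    for st in statements:
        st_id = st.get("id")
        if not st_id or st_id in seen_ids:  # dedupe re-delivered statements
            continue
        try:
            row = {
                "statement_id": st_id,
                # Only mbox actors are handled; account-based actors raise KeyError.
                "learner": st["actor"]["mbox"].removeprefix("mailto:").lower(),
                "verb": st["verb"]["id"].rsplit("/", 1)[-1],
                "activity": st["object"]["id"],
                "score": st.get("result", {}).get("score", {}).get("scaled"),
                "timestamp": normalize_timestamp(st["timestamp"]),
            }
        except (KeyError, TypeError, ValueError, AttributeError):
            continue  # validate: skip malformed statements
        row.update(CATALOG.get(row["activity"], {"content_type": None, "objective": None}))
        seen_ids.add(st_id)
        rows.append(row)
    return rows
```

In a real pipeline, dropped statements would usually be written to a quarantine table rather than silently skipped, so the data quality checks can report on them.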

Ensuring Ongoing Data Quality

Schedule regular data refreshes, run automated data-quality tests on every load, and keep datasets and pipeline code under version control.

3. Architecture & Infrastructure

In-LMS vs. External AI Services

You can embed AI logic directly in your LMS or connect to external AI platforms:

- Internal AI: Lower latency and full control, but requires in-house ML expertise

- External AI: Easier setup and managed scaling, but limited customization
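Whichever option you pick, hiding it behind a common interface keeps the choice reversible: you can start with an external service and move in-house later without touching LMS code. In this sketch the `Recommender` protocol, both classes, and the injected `call_service` client are hypothetical stand-ins, not any vendor's API.

```python
from typing import Callable, Protocol


class Recommender(Protocol):
    def recommend(self, learner_id: str, k: int) -> list[str]: ...


class InternalRecommender:
    """Runs inside the LMS: full control, but you own and maintain the model."""

    def __init__(self, popularity: dict[str, int]):
        # Hypothetical in-house signal: course id -> enrollment count.
        self.popularity = popularity

    def recommend(self, learner_id: str, k: int) -> list[str]:
        ranked = sorted(self.popularity, key=self.popularity.get, reverse=True)
        return ranked[:k]


class ExternalRecommender:
    """Wraps a third-party AI service; the network call is injected as a callable."""

    def __init__(self, call_service: Callable[[str], list[str]]):
        self.call_service = call_service  # hypothetical client, e.g. an HTTP wrapper

    def recommend(self, learner_id: str, k: int) -> list[str]:
        return list(self.call_service(learner_id))[:k]


def recommendations_for(engine: Recommender, learner_id: str, k: int = 3) -> list[str]:
    """LMS code depends only on the interface, so engines can be swapped."""
    return engine.recommend(learner_id, k)
```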

Cloud vs. On-Premises

- Cloud: Flexible and scalable, but watch for escalating costs

- On-Premises: Stronger data control, but higher maintenance overhead

Real-Time vs. Batch Operations

- Real-time: Ideal for chatbots or live adaptive assessments

- Batch: Suited to scheduled jobs such as weekly learner risk scores or reporting
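A batch job can be as simple as a function a scheduler (for example, cron) runs once a week. This sketch flags learners with little recent activity; the 7-day window, the `min_events` threshold, and the `(learner_id, date)` event format are assumptions, and a trained dropout model would normally replace the threshold rule.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekly_risk_report(events: list[tuple[str, date]], learners: list[str],
                       run_date: date, min_events: int = 3) -> dict[str, str]:
    """Flag learners with fewer than min_events in the 7 days before run_date."""
    window_start = run_date - timedelta(days=7)
    counts: dict[str, int] = defaultdict(int)
    for learner_id, day in events:
        if window_start <= day < run_date:  # only count activity inside the window
            counts[learner_id] += 1
    return {
        lid: "at_risk" if counts[lid] < min_events else "on_track"
        for lid in learners
    }
```

Learners with no events at all (like a new enrollee who never logged in) are flagged too, because they appear in `learners` even though they never appear in `events`.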

4. Model Development & Maintenance

Choosing the Right Models

For AI-powered LMS, common models include:

- Classification for dropout risk

- Regression for predicting completion timeframe

- Recommendation engines for content suggestions
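To illustrate the recommendation engine idea, here is a minimal user-based collaborative filter: it finds learners whose course history resembles the target learner's (cosine similarity) and suggests courses those neighbors took. The interaction data shape and course names are hypothetical, and production engines typically use matrix factorization or embeddings at larger scale.

```python
import math


def cosine(a: dict[str, float], b: dict[str, float]) -> float:
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


def recommend(target: str, interactions: dict[str, dict[str, float]], k: int = 2) -> list[str]:
    """Score courses the target has not taken by similarity-weighted neighbor activity."""
    seen = interactions[target]
    scores: dict[str, float] = {}
    for other, courses in interactions.items():
        if other == target:
            continue
        sim = cosine(seen, courses)
        for course, value in courses.items():
            if course not in seen:
                scores[course] = scores.get(course, 0.0) + sim * value
    ranked = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)
    # Drop zero-score items: courses only dissimilar learners took.
    return [course for course, score in ranked[:k] if score > 0]


if __name__ == "__main__":
    interactions = {
        "ana": {"sql": 1, "python": 1},
        "ben": {"sql": 1, "python": 1, "pandas": 1},
        "cho": {"excel": 1, "pivot-tables": 1},
    }
    print(recommend("ana", interactions))
```

Here Ana is suggested "pandas" because Ben shares her history, while Cho's unrelated courses score zero and are left out.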

Custom Models vs. AutoML

- Custom Models: Best when you need precise behavior and full control over your data

- AutoML Tools: Help you build models quickly with limited data science resources

Training & Retraining

- Gather labeled data (e.g., past dropouts, course matches)

- Split into training, validation, and test datasets

- Retrain periodically to counter “model drift” as learner behavior and content change
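The split step above is easy to get subtly wrong, for example by reshuffling differently on each run. This sketch shuffles once with a fixed seed and cuts the data into training, validation, and test partitions; the 70/15/15 proportions and the seed are assumptions.

```python
import random


def split_dataset(records: list, train: float = 0.7, val: float = 0.15, seed: int = 42):
    """Shuffle once with a fixed seed, then cut into train/validation/test partitions."""
    if not 0 < train + val < 1:
        raise ValueError("train + val must leave room for a test set")
    shuffled = records[:]  # copy so the caller's list is not reordered
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])
```

For dropout prediction, a time-based split (older cohorts for training, the newest cohort for testing) often mirrors deployment more faithfully; the random split shown here is the simplest variant.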

Explainable AI

Use inherently interpretable models such as decision trees, or post-hoc explanation methods such as SHAP, to justify predictions. Explainability is key for compliance and learner trust.
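For a linear model such as logistic regression, and assuming independent features, the SHAP value of each feature on the raw (pre-sigmoid) score reduces to its weight times its deviation from the feature mean. This sketch computes those contributions; the feature names, weights, and means are hypothetical, and for nonlinear models you would use a SHAP library instead.

```python
def explain_linear(weights: dict[str, float], means: dict[str, float],
                   x: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature contribution w_i * (x_i - mean_i), largest magnitude first.

    Positive values push the raw score toward the predicted class (here: dropout).
    """
    contributions = {f: w * (x[f] - means[f]) for f, w in weights.items()}
    return sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)


if __name__ == "__main__":
    # Hypothetical dropout-risk model.
    weights = {"days_inactive": 0.30, "avg_quiz_score": -2.0, "forum_posts": -0.10}
    means = {"days_inactive": 3.0, "avg_quiz_score": 0.75, "forum_posts": 4.0}
    learner = {"days_inactive": 10.0, "avg_quiz_score": 0.55, "forum_posts": 1.0}
    for feature, value in explain_linear(weights, means, learner):
        print(f"{feature:>15}: {value:+.2f}")
```

An explanation like "mostly because of 10 days of inactivity" is something an instructor can act on and a learner can contest, which is the point of explainability here.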

5. Privacy, Ethics & Consent Management

GDPR, FERPA & Other Regulations

- Identify personal data (e.g., behavior logs)

- Collect only the data you actually need (data minimization)

- Implement transparent consent mechanisms
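Minimization and consent can be enforced at the point where records enter the AI pipeline. This sketch assumes consent is the lawful basis you rely on and that consent status is stored per learner; the `ESSENTIAL_FIELDS` allowlist and field names are hypothetical.

```python
from typing import Optional

# Hypothetical allowlist: the only fields the AI features actually need.
ESSENTIAL_FIELDS = frozenset({"learner_id", "course_id", "progress", "last_active"})


def prepare_for_ai(record: dict, consent: dict[str, bool]) -> Optional[dict]:
    """Return only allowlisted fields, or None if the learner has not consented."""
    if not consent.get(record.get("learner_id"), False):
        return None  # no recorded consent: exclude the record from AI processing
    return {k: v for k, v in record.items() if k in ESSENTIAL_FIELDS}
```

Using an allowlist rather than a blocklist means a new column added to the LMS export is excluded by default until someone decides it is needed.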

Anonymization & Pseudonymization

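Strip personally identifiable information (PII) from dashboards and shared datasets, sharing only anonymized data where possible and pseudonymized IDs where records must stay linkable.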
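A common pseudonymization technique is a keyed hash (HMAC) of the learner ID: the same learner always maps to the same token, so records stay linkable, but tokens cannot be linked back to learners without the secret key. The 16-character truncation is an assumption for readability. Note that under GDPR, pseudonymized data is still personal data, so the key must be stored separately and access-controlled.

```python
import hashlib
import hmac


def pseudonymize(learner_id: str, secret_key: bytes) -> str:
    """HMAC-SHA256 token: stable per learner, unlinkable without the key."""
    digest = hmac.new(secret_key, learner_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # shortened for dashboards; keep the full digest if collisions matter
```

A plain unkeyed hash would be weaker: anyone with a list of learner IDs could hash them all and re-identify every token.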

Fairness & Bias

- Audit model outcomes across groups such as gender, ethnicity, and learning style

- Monitor for unintended bias

- Adjust models and data if needed
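A basic audit compares how a dropout-risk model treats each group. Recall (true-positive rate) matters most here, because a low recall means at-risk learners in that group are being missed. This sketch flags any group whose recall is below 0.8 times the best group's; that ratio borrows the "four-fifths" heuristic and is an assumption, not a legal or statistical standard for your context.

```python
from collections import defaultdict


def group_rates(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, dict]:
    """Per-group flag rate and true-positive rate (recall) for a binary risk model."""
    counts = defaultdict(lambda: {"n": 0, "flagged": 0, "pos": 0, "tp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["flagged"] += p
        c["pos"] += t
        c["tp"] += t * p
    return {
        g: {
            "flag_rate": c["flagged"] / c["n"],
            "tpr": c["tp"] / c["pos"] if c["pos"] else None,  # None: no actual dropouts
        }
        for g, c in counts.items()
    }


def audit(y_true: list[int], y_pred: list[int], groups: list[str], threshold: float = 0.8):
    """Flag groups whose recall falls below threshold times the best group's recall."""
    rates = group_rates(y_true, y_pred, groups)
    tprs = {g: r["tpr"] for g, r in rates.items() if r["tpr"] is not None}
    best = max(tprs.values(), default=0.0)
    flagged = sorted(g for g, v in tprs.items() if best > 0 and v / best < threshold)
    return rates, flagged
```

Small groups produce noisy rates, so a flag should trigger a closer look (more data, confidence intervals) rather than an automatic model change.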

User Transparency

- Disclose that AI is active: “Our system may recommend content based on your performance.”

- Provide opt-out where possible

6. Integration & UX Design

Embedded vs. Widget-Based

- Embedded: A more seamless UX, but risks cluttering the interface

- Widget (e.g., chatbot pop-up): Modular features that are easier to deploy

UI / Conversation Design

Design chatbots with a clear greeting, help commands, and fallback responses. Keep the copy friendly and human.
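The greeting, help, and fallback pattern can be shown with a tiny keyword router. This is a sketch only: the commands, wording, and `context` keys are hypothetical, and real assistants usually use an intent classifier or an LLM, but the same three guaranteed paths still apply.

```python
GREETING = "Hi! I'm the course assistant. Type 'help' to see what I can do."
HELP_TEXT = ("Try: 'deadline' (next due date), 'progress' (your completion), "
             "or 'human' (contact an instructor).")
FALLBACK = "Sorry, I didn't catch that. Type 'help' for options or 'human' to reach an instructor."


def respond(message: str, context: dict) -> str:
    """Route a learner message to a reply; unknown input always gets the fallback."""
    text = message.strip().lower()
    if text in {"hi", "hello", "start"}:
        return GREETING
    if text == "help":
        return HELP_TEXT
    if "deadline" in text:
        return f"Your next deadline is {context['next_deadline']}."
    if "progress" in text:
        return f"You've completed {context['progress']:.0%} of this course."
    if "human" in text:
        # Hypothetical hook: a real bot would open a handoff ticket here.
        return "Okay, I'll pass your question to an instructor."
    return FALLBACK
```

The fallback names both a way forward ('help') and an escape hatch ('human'), so a confused learner is never stuck in a loop.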

Feedback Loops

Allow learners and instructors to rate AI recommendations or opt out of suggestions. Use this data to improve models.
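A feedback loop needs somewhere to store ratings and a rule for acting on them. In this sketch, consistently low-rated recommendations are hidden and opted-out learners receive none; the thresholds are hypothetical, and the stored ratings could later serve as training labels for the recommendation model.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional


class FeedbackStore:
    """Collects 1-5 star ratings on recommendations and honors opt-outs."""

    def __init__(self, min_ratings: int = 3, min_avg: float = 2.5):
        self.ratings: dict[str, list[int]] = defaultdict(list)
        self.opted_out: set[str] = set()
        self.min_ratings = min_ratings  # hypothetical: evidence needed before hiding an item
        self.min_avg = min_avg          # hypothetical: averages below this hide the item

    def rate(self, course_id: str, stars: int) -> None:
        if not 1 <= stars <= 5:
            raise ValueError("stars must be between 1 and 5")
        self.ratings[course_id].append(stars)

    def opt_out(self, learner_id: str) -> None:
        self.opted_out.add(learner_id)

    def average(self, course_id: str) -> Optional[float]:
        scores = self.ratings.get(course_id)
        return mean(scores) if scores else None

    def filter_recommendations(self, learner_id: str, recommendations: list[str]) -> list[str]:
        """Return nothing for opted-out learners; hide consistently low-rated items."""
        if learner_id in self.opted_out:
            return []
        kept = []
        for course_id in recommendations:
            scores = self.ratings.get(course_id, [])
            if len(scores) >= self.min_ratings and mean(scores) < self.min_avg:
                continue
            kept.append(course_id)
        return kept
```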

7. Educator & Administrative Adoption

Training & Transparency

Offer workshops and handouts explaining:

- What AI does

- Why it helps

- How it respects privacy and supports roles

Role-Based Access

Instructors see analytics dashboards; admins see global reports; learners see personalized suggestions.

Incentives & KPIs

Include AI feature adoption in KPIs (e.g., the share of learners who use chatbot tips before deadlines) to drive usage.

8. Monitoring, Evaluation & Iteration

Key Metrics

Track:

- Feature usage (chatbot sessions, recommendations clicked)

- Impact (dropout rate, completion time, scores)

- User feedback (survey ratings)
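Each metric family reduces to a simple ratio, and impact is most meaningful as a relative change against a pre-launch baseline (for example, the 10% dropout reduction target from the use-case section). The parameter names below are hypothetical. A before/after comparison does not by itself prove the AI feature caused the change; comparing pilot courses against similar non-pilot courses gives stronger evidence.

```python
from statistics import mean


def kpi_summary(recs_shown: int, recs_clicked: int, enrolled: int,
                dropped: int, survey_ratings: list[int]) -> dict[str, float]:
    """One headline number per metric family: usage, impact, and feedback."""
    return {
        "rec_click_through": recs_clicked / recs_shown if recs_shown else 0.0,
        "dropout_rate": dropped / enrolled if enrolled else 0.0,
        "avg_survey_rating": mean(survey_ratings) if survey_ratings else 0.0,
    }


def relative_reduction(baseline: float, current: float) -> float:
    """Relative change against a baseline, e.g. 0.10 for a 10% reduction."""
    return (baseline - current) / baseline
```

For instance, a dropout rate falling from 20% to 18% is a 10% relative reduction, even though it is only 2 percentage points in absolute terms.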

Regular Review Cadence

- Weekly: Feature adoption

- Monthly: Performance impact

- Quarterly: Model audits for bias, drift, fairness
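One common drift check for the quarterly audit is the Population Stability Index (PSI), which compares the distribution of a feature or model score in the current period against a baseline period. This sketch bins values on the baseline's range; the 10 equal-width bins and the epsilon are assumptions, and the usual reading (below 0.1 stable, 0.1 to 0.25 moderate shift, above 0.25 significant shift) is a commonly cited rule of thumb rather than a formal test.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `actual` against the `expected` (baseline) sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values to edge bins
        # eps avoids log(0) and division by zero for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

Running this on each model input and on the predicted risk scores every quarter gives an early signal that retraining may be due, before accuracy visibly degrades.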

Rapid Iteration

Adopt “fail fast” cycles for new features like adaptive quizzes or micro-course suggestions.

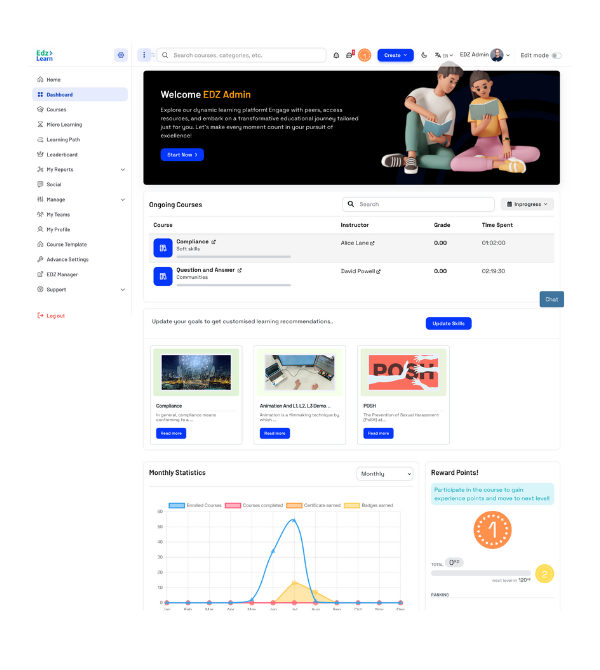

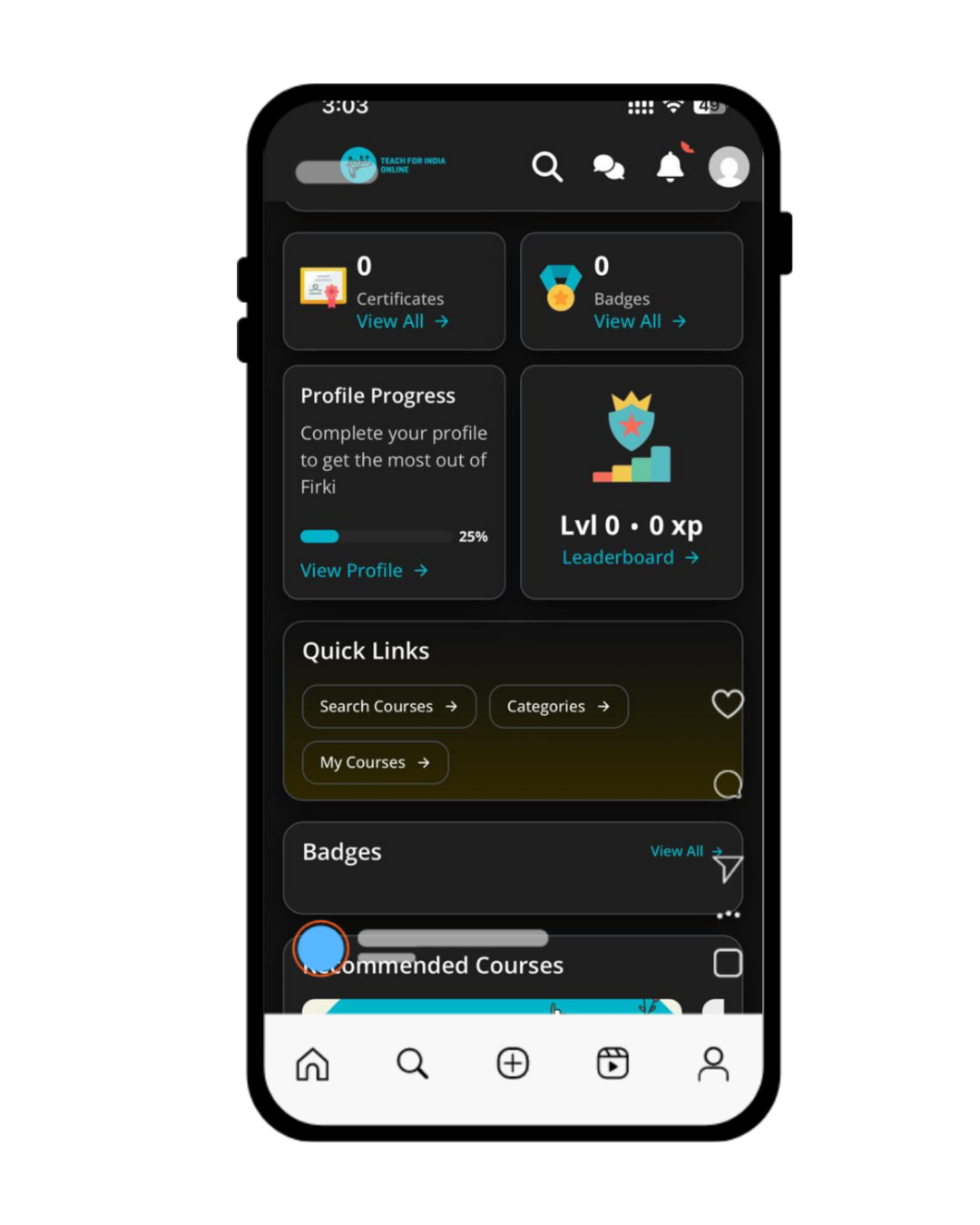

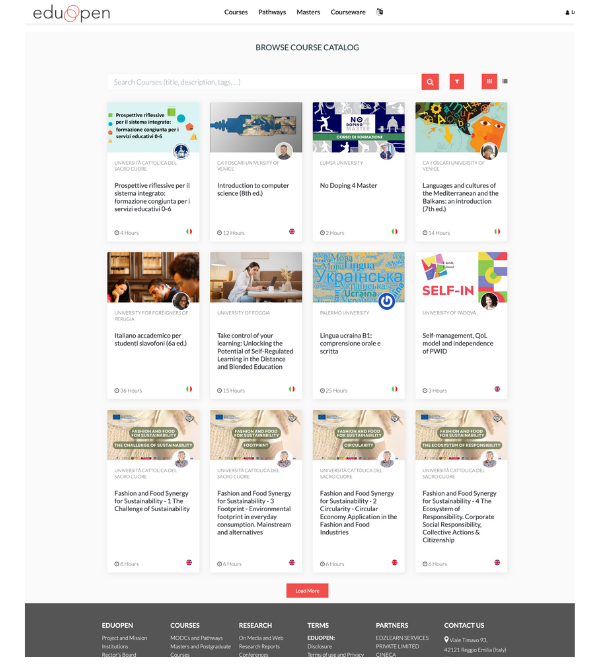

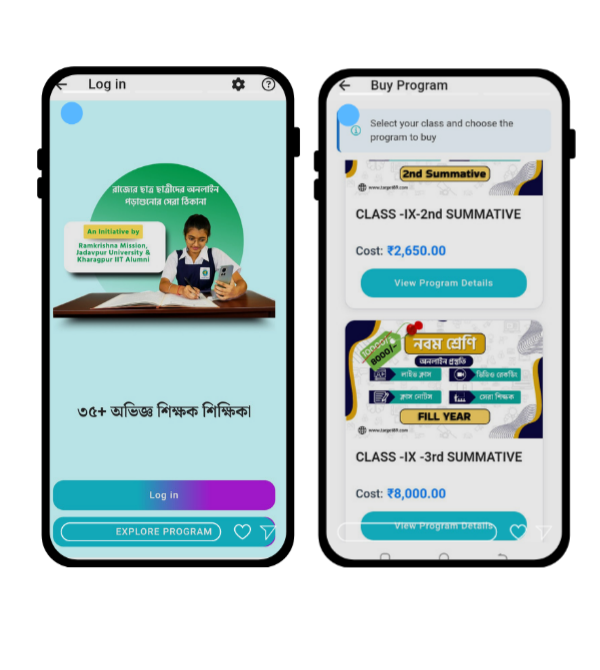

9. Illustrative Example: EdzLMS AI Rollout

Consider a rollout in a fictitious organization:

- Phase 1 (Pilot): Launch a chatbot in 2 courses; verify data quality and measure adoption

- Phase 2 (Analytics): Add a predictive dropout model that triggers automated nudges to instructors

- Phase 3 (Content Personalization): Introduce a recommendation module; analyze its impact

- Phase 4 (Generative AI): Introduce auto-generated summaries and quizzes

- Phase 5 (Scale & Govern): Roll out across the LMS and implement model governance and compliance controls

In this scenario, within 12 months the organization saw:

- 35% reduction in dropouts

- 20% faster time to competency

- 40% fewer support tickets

- 85% user satisfaction with AI features

10. Future Trends

- Emotion- and sentiment-aware systems that use NLP and voice cues

- Voice-based tutoring agents responding via audio

- Multimodal learning signals: facial, clickstream, text combined

- Decentralized AI via federated learning, which keeps raw learner data local for stronger privacy

- Co-learning models that pair live instructors with AI assistance
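To make the federated learning trend concrete: each site (for example, a campus or a regional LMS instance) trains on its own learners and sends only model weights to a central server, which combines them. This sketch shows the Federated Averaging (FedAvg) aggregation step, weighting each client by its local sample count; the plain-list weight format is an assumption, and real deployments add secure aggregation and often differential privacy on top.

```python
def federated_average(client_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg aggregation: average client weight vectors, weighted by local sample count."""
    if not client_updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("clients reported zero training samples")
    dim = len(client_updates[0][0])
    return [sum(w[i] * n for w, n in client_updates) / total for i in range(dim)]
```

Raw learner records never leave each site; only the weight vectors and sample counts are shared.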

Conclusion

Implementing AI effectively in your LMS is not magic—it’s a strategic journey that requires planning, data discipline, and ethical foresight. When done right, it transforms learning: shifting from static courses to dynamic, personalized, and human-centric experiences.

From the start, align your AI strategy with business and learning goals, build the data foundation for effective models, choose the right architecture, and prioritize transparency and fairness. Carefully design UX and educator onboarding to ensure adoption, monitor impact through data and feedback, and iterate quickly.

Ultimately, AI should serve people—not replace them. A modern, responsible LMS is where human instructors and AI agents collaborate to guide learners toward success. Implemented thoughtfully, AI in your LMS becomes a partner in education—empowering engagement, enhancing results, and unlocking new growth at scale.