Ethics and Privacy in AI-Based E-learning Systems

When we implement AI-driven learning platforms, the promise is huge: personalization, real-time feedback, and smarter adaptive paths. But that promise comes with a big responsibility. This article explores how to handle ethics and privacy in AI-based e-learning systems so that your learners are protected and your organization stays on solid ground.

Why Ethics and Privacy Matter in AI Learning

AI systems in learning environments collect and analyze large amounts of data, including quiz results, time spent on tasks, and even behavioral patterns. Without clear rules, that data can be misused and learner trust lost.

For example, if learner data is used to make decisions without transparency, biased outcomes can creep in.

Privacy breaches can also expose sensitive learner details, weakening the relationship between educator and learner.

Ethics and privacy are not just a “nice-to-have”; they are foundational if your e-learning system is to be trusted and effective.

Key Principles for an Ethical, Private AI-Based E-learning System

1. Introduction: The Promise & the Perils

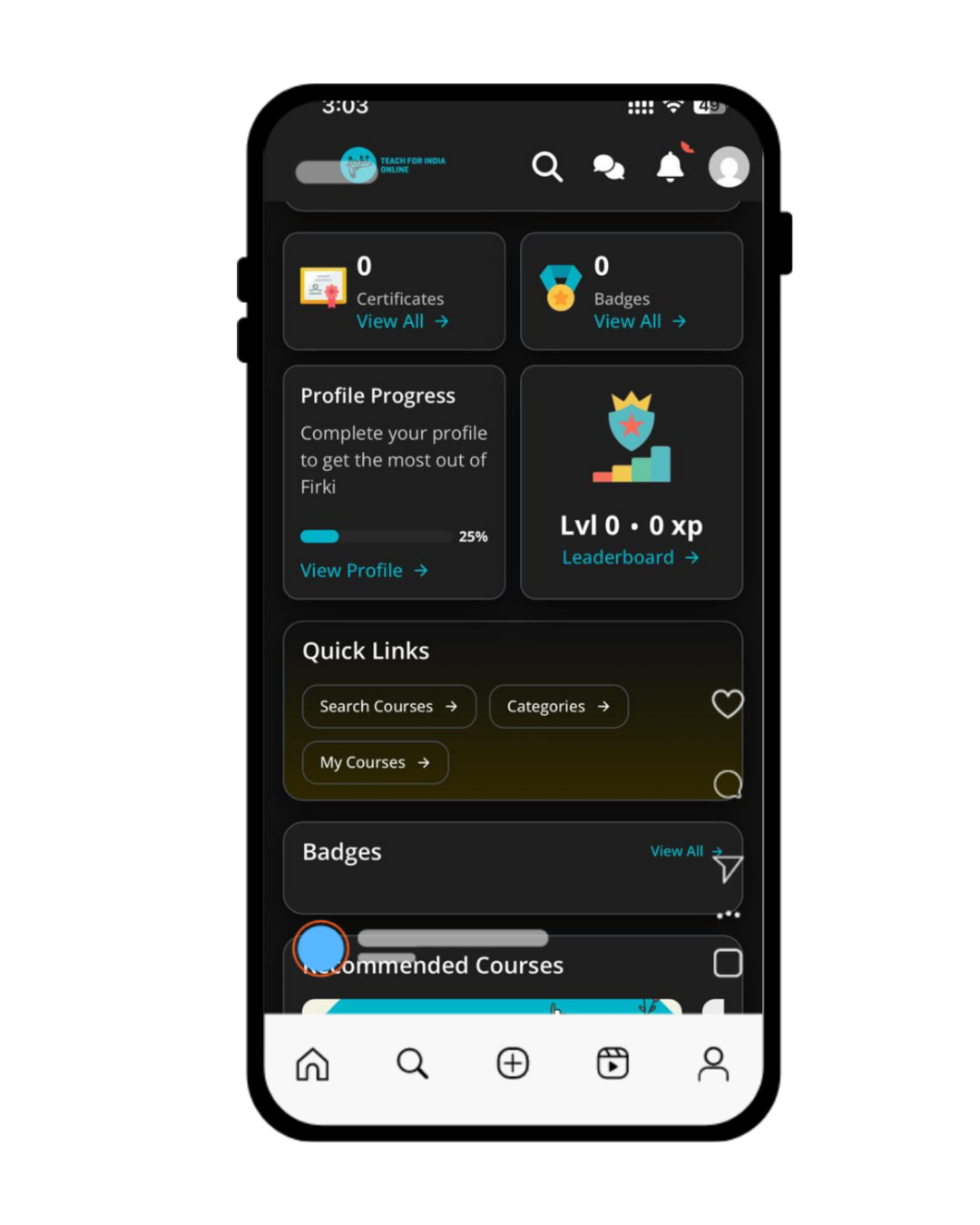

AI-powered e-learning platforms offer real promise: personalized pathways, real-time feedback, tailored content, and intelligent tutoring can all improve learner outcomes. But these capabilities come with responsibility. AI systems thrive on personal data, which raises important ethical questions around privacy, equity, data security, and transparency.

As digital learning grows, so does public scrutiny of how learner information is used. Any organization deploying AI must balance innovation with responsibility, ensuring learners are supported but never exposed to risk, manipulation, or unfair judgments.

In this deep dive, we’ll explore the key dimensions of ethics and privacy in AI-based e-learning, with practical guidelines and strategies for responsible deployment.

2. Learner Data: Collection, Consent & Minimization

Why It Matters

AI needs data such as clicks, quiz scores, screen time, and discussion activity, but more data doesn’t automatically mean better insights. Collecting too much erodes trust and increases risk.

Best Practices

- Purpose limitation: Collect data only for a clearly stated purpose, and only what that feature strictly needs (e.g. performance logs for adaptive modules, not browsing history).

- Informed consent: Clearly explain what’s collected, why, and how it will be used, and let learners opt out if they wish.

- Data minimization: Discard outdated or irrelevant data, and don’t hang onto voice logs or emotional scores indefinitely.
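One way to enforce these practices in code is to check purpose and consent at the moment of collection. The sketch below assumes a hypothetical `PURPOSES` registry in which each feature declares the fields it needs and how long they may be kept; the feature names, fields, and retention periods are illustrative, not recommendations.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical purpose registry: each feature declares the fields it needs and
# how long they may be kept. Feature names, fields, and periods are illustrative.
PURPOSES = {
    "adaptive_modules": {
        "fields": {"quiz_score", "module_id", "time_on_task"},
        "retention": timedelta(days=365),
    },
    "voice_tutoring": {
        "fields": {"transcript"},
        "retention": timedelta(days=30),
    },
}


@dataclass
class StoredRecord:
    purpose: str
    data: dict
    collected_at: datetime


def collect(event: dict, purpose: str, consents: set[str]) -> StoredRecord | None:
    """Store only the fields a declared purpose needs, and only with consent."""
    if purpose not in PURPOSES or purpose not in consents:
        return None  # undeclared purpose, or the learner opted out: store nothing
    allowed = PURPOSES[purpose]["fields"]
    minimal = {k: v for k, v in event.items() if k in allowed}
    return StoredRecord(purpose, minimal, datetime.now(timezone.utc))


def purge_expired(records: list[StoredRecord], now: datetime) -> list[StoredRecord]:
    """Keep only records still inside their purpose's retention period."""
    return [r for r in records
            if now - r.collected_at <= PURPOSES[r.purpose]["retention"]]
```

With this design, a stray field such as `browsing_history` is dropped before it is ever stored, and running `purge_expired` on a schedule means retention limits don’t depend on someone remembering to clean up.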

3. Data Security & Governance

The Stakes

A breach of personal learning data can expose student behavior, mental health trends, or performance issues, so preventing breaches must be a top priority.

Safeguards to Look For

- Encryption: Data should be encrypted both in transit and at rest.

- Secure access: Use strong authentication, set role-based controls, and restrict API access with allowlists.

- Audit logs: Maintain records of who accessed data and when.

- Governance policies: Define data ownership (institution vs learner), retention periods, and deletion processes.
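Encryption is best left to vetted libraries and managed key services, so the sketch below covers only two of these safeguards: role-based access checks and an audit log that records every access attempt. The role names and permission strings (`instructor`, `read:grades`, and so on) are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping; a real deployment would load this
# from its governance policy rather than hard-coding it.
ROLE_PERMISSIONS = {
    "instructor": {"read:grades"},
    "data_steward": {"read:grades", "export:records", "delete:records"},
    "support": set(),
}


@dataclass(frozen=True)
class AuditEntry:
    timestamp: datetime
    user: str
    role: str
    action: str
    learner_id: str
    allowed: bool


class AccessController:
    def __init__(self) -> None:
        self.audit_log: list[AuditEntry] = []

    def check(self, user: str, role: str, action: str, learner_id: str) -> bool:
        """Return whether the role may perform the action, logging the attempt."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        # Denied attempts are logged too, so audits show who tried what, and when.
        self.audit_log.append(
            AuditEntry(datetime.now(timezone.utc), user, role, action, learner_id, allowed)
        )
        return allowed
```

Logging denied requests alongside granted ones is a deliberate choice: repeated denials are often the first sign of a misconfigured role or an attempted misuse.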

4. Fairness & Bias in Algorithms

Common Pitfalls

AI may unintentionally disadvantage learners based on gender, cultural background, or learning style. For example:

- Difficulty models trained mainly on native English speakers may misjudge the proficiency of non-native learners.

- Predictive models may flag too many beginners as “at risk” while overlooking the systemic barriers behind their results.

Mitigation Strategies

- Use representative training data: Include learners from the full range of backgrounds the system will serve.

- Track fairness metrics: Compare false positive and false negative rates across different groups.

- Explainable AI: Choose interpretable models or techniques like SHAP to explain predictions.

- Regular audits: Ensure equity by reviewing performance across racial, linguistic, or gender lines.
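Comparing error rates across groups is the core of the fairness check described above. Here is a minimal sketch for a binary “at risk” classifier; the group labels and data are hypothetical, and what counts as an acceptable gap is a policy decision the code cannot settle.

```python
import math
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Per-group false positive rate (FPR) and false negative rate (FNR).

    Labels are binary: 1 = "at risk", 0 = "not at risk".
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "pos": 0, "neg": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["pos"] += 1
            c["fn"] += int(pred == 0)  # missed a learner who needed support
        else:
            c["neg"] += 1
            c["fp"] += int(pred == 1)  # wrongly flagged a learner as at risk
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else math.nan,
            "fnr": c["fn"] / c["pos"] if c["pos"] else math.nan,
        }
        for group, c in counts.items()
    }


def largest_gap(rates, metric):
    """Spread between the best- and worst-served groups for one metric."""
    values = [r[metric] for r in rates.values() if not math.isnan(r[metric])]
    return max(values) - min(values) if values else 0.0
```

For example, if native speakers are wrongly flagged 50% of the time and non-native speakers 100% of the time, `largest_gap(rates, "fpr")` reports a 0.5 gap, a clear signal to investigate before deployment. Libraries such as Fairlearn offer more complete versions of this kind of disaggregated evaluation.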

5. Transparency & Accountability

Why Learners Need This

Learners need to understand how AI affects them so they can take control and trust the system.

Transparency Tactics

- Model disclosure: Clearly state when AI is used, for what purpose, and at what point in the learner journey.

- Reason summaries: Explain each recommendation in plain language. For instance, “You’ve watched only 3 of 10 videos; we recommend a review session.”

- Feedback channels: Allow users to challenge decisions, correct errors, or request human review.
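A reason summary is easiest to guarantee when the explanation is produced together with the recommendation, rather than generated afterwards. The sketch below assumes a simple rule (recommend a review when fewer than a hypothetical 50% of videos have been watched) and shows how a learner’s challenge could be recorded for human review; the class and function names are illustrative.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Recommendation:
    action: str
    reason: str  # plain-language explanation shown to the learner
    disputes: list[str] = field(default_factory=list)

    def request_human_review(self, comment: str) -> None:
        """Record a learner's challenge so a person can review the decision."""
        self.disputes.append(comment)


def recommend_review(videos_watched: int, videos_total: int,
                     threshold: float = 0.5) -> Recommendation | None:
    """Rule-based recommendation that always carries its own reason."""
    if videos_total <= 0:
        return None
    if videos_watched / videos_total < threshold:
        return Recommendation(
            action="review_session",
            reason=(f"You've watched only {videos_watched} of {videos_total} "
                    "videos; we recommend a review session."),
        )
    return None
```

Because a `Recommendation` cannot be constructed without a `reason`, the interface can never show an unexplained suggestion.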

6. Autonomy, Informed Consent & Replayability

AI-generated recommendations shouldn’t replace learner agency.

- Respect learner choice: Always let learners opt out of AI features and choose manual learning paths.

- Undo or reset: Let learners override system-recommended routes.

- Replay access: Ensure learners can see past recommendations or feedback to reflect on progress.
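These three controls can be modeled as a small state object. In this sketch (all names hypothetical), AI suggestions are ignored entirely when the learner has opted out, learner overrides always win, every change can be undone, and a history list supports replay access.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LearnerPath:
    ai_enabled: bool = True  # learner-controlled opt-out switch
    current_path: list[str] = field(default_factory=list)
    history: list[tuple[str, list[str]]] = field(default_factory=list)
    _previous: list[list[str]] = field(default_factory=list, repr=False)

    def _set_path(self, path: list[str]) -> None:
        self._previous.append(self.current_path)  # remember for undo
        self.current_path = list(path)

    def apply_recommendation(self, recommended: list[str]) -> bool:
        """Apply an AI-recommended route unless the learner has opted out."""
        if not self.ai_enabled:
            return False  # opted out: the suggestion is not applied or recorded
        self.history.append(("recommended", list(recommended)))
        self._set_path(recommended)
        return True

    def override(self, chosen: list[str]) -> None:
        """The learner's own route always replaces the current one."""
        self.history.append(("learner_override", list(chosen)))
        self._set_path(chosen)

    def undo(self) -> bool:
        """Revert to the previous route, if there is one."""
        if not self._previous:
            return False
        self.current_path = self._previous.pop()
        self.history.append(("undo", list(self.current_path)))
        return True
```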

7. Privacy in AI-Driven Assessments & Monitoring

Automated exam supervision (e.g. AI proctoring, webcam monitoring, keystroke analysis) can border on surveillance.

Risks

- Unfair flags due to false positives

- Sensitive data collection (face, voice) without justification

- Legal concerns, since rules on monitoring and biometric data vary by region

Ethical Protocols

- Proportionality: Only log information needed for academic integrity.

- Transparency: Inform learners which data is captured and why.

- Human oversight: Ensure every flagged issue is reviewed by a person.

- Privacy-friendly tech: Use privacy-preserving techniques such as on-device processing or anonymized signals.
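The human-oversight rule can be made structural rather than procedural: the automated system can open a flag but has no way to close one. In this hypothetical sketch, a flag stores only a signal label and a confidence score (no raw video or audio), in line with proportionality, and only a named reviewer can confirm or clear it.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING_HUMAN_REVIEW = "pending"
    CLEARED = "cleared"
    CONFIRMED = "confirmed"


@dataclass
class Flag:
    exam_id: str
    signal: str        # a label such as "face_not_detected", not raw media
    confidence: float
    status: Status = Status.PENDING_HUMAN_REVIEW
    reviewer: str | None = None


class FlagQueue:
    def __init__(self) -> None:
        self._flags: list[Flag] = []

    def raise_flag(self, exam_id: str, signal: str, confidence: float) -> Flag:
        # Automation can only open a flag; it never sets the outcome.
        flag = Flag(exam_id, signal, confidence)
        self._flags.append(flag)
        return flag

    def review(self, flag: Flag, reviewer: str, confirmed: bool) -> None:
        """Only a named human reviewer can confirm or clear a flag."""
        if not reviewer:
            raise ValueError("a named human reviewer is required")
        flag.reviewer = reviewer
        flag.status = Status.CONFIRMED if confirmed else Status.CLEARED

    def pending(self) -> list[Flag]:
        return [f for f in self._flags if f.status is Status.PENDING_HUMAN_REVIEW]
```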

8. Data Retention, Deletion & Portability

Learner Rights

Privacy laws such as the GDPR, the CCPA, and India’s DPDP Act give learners some or all of the following rights, depending on the law and jurisdiction:

- Review their data

- Erase it

- Download it or transfer it elsewhere

Platform Requirements

- Right to be forgotten: Implement secure data deletion on request.

- Downloadable records: Allow learners to export transcripts, feedback, and progress at any time.

- Portability tools: Let them move their learning profile to other platforms.
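Export and erasure can be sketched against a simple store. This toy version (the `LearnerDataStore` class is hypothetical) exports everything held about a learner as JSON, a structured, machine-readable format suited to portability, and erases it on request. In a real platform, deletion must also reach backups, caches, analytics copies, and any third-party processors.

```python
from __future__ import annotations

import json
from typing import Any


class LearnerDataStore:
    """Toy in-memory store of learner records, grouped by category."""

    def __init__(self) -> None:
        self._records: dict[str, dict[str, list[Any]]] = {}

    def save(self, learner_id: str, category: str, value: Any) -> None:
        self._records.setdefault(learner_id, {}).setdefault(category, []).append(value)

    def export(self, learner_id: str) -> str:
        """Everything held about one learner, as portable JSON."""
        return json.dumps(
            {"learner_id": learner_id, "records": self._records.get(learner_id, {})},
            indent=2,
            sort_keys=True,
        )

    def erase(self, learner_id: str) -> bool:
        """Delete a learner's records; True if anything was removed."""
        # A real system must also purge backups, caches, and vendor copies.
        return self._records.pop(learner_id, None) is not None
```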

9. Third-Party Integrations & AI Services

AI integrations often rely on external APIs or vendors.

Hidden Concerns

- Unknown data flows to third parties

- Varying security standards

- Vendor lock-in or lack of transparency

Mitigation Steps

- Vendor due diligence: Ask for security audits, privacy impact assessments, and compliance certifications.

- Data contracts: Clearly define access, usage, and retention policies.

- Continuous monitoring: Periodically check third parties for compliance and performance.
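A data contract is most useful when it is also enforced in code at the point where data leaves your system. The sketch below assumes a hypothetical contract with an external essay-scoring vendor and strips every field the contract does not allow before the request is built.

```python
from __future__ import annotations

import logging
from dataclasses import dataclass

logger = logging.getLogger(__name__)


@dataclass(frozen=True)
class DataContract:
    vendor: str
    allowed_fields: frozenset[str]
    retention_days: int  # agreed in the contract; the vendor must enforce it


# Hypothetical contract with an external essay-scoring service.
ESSAY_SCORER = DataContract(
    vendor="example-essay-scorer",
    allowed_fields=frozenset({"essay_text", "rubric_id"}),
    retention_days=30,
)


def outbound_payload(record: dict, contract: DataContract) -> dict:
    """Return only the fields the contract permits the vendor to receive."""
    withheld = sorted(set(record) - contract.allowed_fields)
    if withheld:
        # Log field names only, never values, so the log itself leaks nothing.
        logger.info("withholding %s from %s", withheld, contract.vendor)
    return {k: v for k, v in record.items() if k in contract.allowed_fields}
```

Filtering by an allowlist rather than a blocklist means a newly added field (say, a learner’s email) stays private by default until someone deliberately adds it to a contract.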

10. Ethical Leadership & Operational Culture

Technology alone isn’t enough; ethical AI needs a supportive culture.

Training & Awareness

- Educate instructors about AI trust, bias, and privacy

- Train tech teams to prioritize data ethics alongside accuracy

Governance Structures

- Establish an AI ethics committee with diverse stakeholders

- Define formal roles: data steward, model owner, privacy officer

- Conduct Bias Impact Assessments before launching AI features

11. Real-World Examples: Learning from Industry

Example 1: Voice-Based Adaptive Tutoring

A platform uses voice to quiz learners. It stores only text transcriptions with anonymized IDs. Voice audio is processed locally and discarded. Learners receive summaries with timestamps, but the voice files never leave the device.
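The anonymized IDs in this example could be produced with a keyed hash. The sketch below uses HMAC-SHA256 from Python’s standard library; the key handling and record layout are assumptions. Note that whoever holds the key can re-link the IDs, so strictly speaking this is pseudonymization, which laws such as the GDPR still treat as personal data.

```python
import hashlib
import hmac
import secrets


def pseudonymous_id(learner_id: str, key: bytes) -> str:
    """Stable pseudonymous ID derived with HMAC-SHA256.

    Unlike a plain hash, the ID cannot be linked back to a known learner ID
    without the key; anyone holding the key can re-link it.
    """
    return hmac.new(key, learner_id.encode("utf-8"), hashlib.sha256).hexdigest()


# Assumption: in production the key lives in a secrets manager, stored
# separately from the transcripts; it is generated inline here for the demo.
KEY = secrets.token_bytes(32)

transcript_record = {
    "learner": pseudonymous_id("student-42", KEY),
    "transcript": "Photosynthesis converts light energy into chemical energy.",
    # No audio field: the recording was processed on-device and discarded.
}
```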

Example 2: Fairness Checks in Coding Platforms

A coding LMS masked learner identity and other identifying details before passing challenge submissions to AI graders. Performance was analyzed across groups to ensure the AI wasn’t biased and that no programmer was disadvantaged because of their coding style.
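Masking identity before AI grading can be approximated by scrubbing obvious identifiers from submissions. This is a minimal, regex-based sketch; the patterns are hypothetical and will miss identifiers in variable names, file paths, or unusual comment styles, so treat it as a first pass rather than a guarantee.

```python
import re

# Hypothetical patterns for identifiers that often appear in code submissions.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
AUTHOR_LINE = re.compile(
    r"^[ \t]*(#|//)[ \t]*(?:author|name|student)[ \t]*:.*$",
    re.IGNORECASE | re.MULTILINE,
)


def mask_submission(source: str) -> str:
    """Redact author header comments and email addresses before AI grading."""
    source = AUTHOR_LINE.sub(r"\1 [redacted]", source)
    return EMAIL.sub("[email]", source)
```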

Example 3: Legitimate Proctoring Practices

In a remote university exam, low-resolution screen captures were used instead of video, preserving privacy while enabling integrity. Students were informed, and all flags required human verification.

12. Practical Checklist for Ethical & Privacy-Compliant AI Deployment

- Identify core learner data requirements

- Create clearly worded informed consent

- Architect for minimal data retention

- Encrypt data in transit and at rest; anonymize it wherever possible

- Audit for algorithmic bias early & often

- Provide transparency and learner control

- Build deletion/export tools for learner data

- Vet all third-party AI suppliers

- Educate your team on data ethics

- Establish an ongoing AI ethics governance board

13. Future Outlook: A Human-Centric AI Vision

Ethical standards are evolving:

- Federated learning protects privacy by keeping raw data local and sharing only model updates

- Privacy-preserving NLP enables features like real-time feedback without saving transcripts

- Consent-as-a-service platforms offer on-demand informed consent and preference control
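Federated learning is commonly implemented with Federated Averaging (FedAvg): each device trains on its own data, and the server averages the resulting model weights, weighted by how many examples each device holds. The sketch below applies it to a tiny linear model with hypothetical data. Model updates can still leak some information, so production systems typically add secure aggregation or differential privacy on top.

```python
from __future__ import annotations


def local_update(weights: list[float], data: list[tuple[list[float], float]],
                 lr: float = 0.1, epochs: int = 5) -> list[float]:
    """Full-batch gradient descent on squared error, using only this device's data."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for i, xi in enumerate(x):
                grad[i] += 2 * err * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


def fedavg_round(global_w: list[float],
                 clients: list[list[tuple[list[float], float]]]) -> list[float]:
    """One FedAvg round: raw data stays with each client; only weights are returned."""
    updates = [(local_update(global_w, data), len(data)) for data in clients]
    total = sum(n for _, n in updates)
    return [sum(w[i] * n for w, n in updates) / total
            for i in range(len(global_w))]


# Hypothetical data: two devices whose learners follow y = 2x.
clients = [[([1.0], 2.0), ([2.0], 4.0)], [([3.0], 6.0)]]
w = [0.0]
for _ in range(20):
    w = fedavg_round(w, clients)
```

After a few rounds the shared weight approaches 2.0, even though the server never sees a single `(x, y)` pair.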

Forward-thinking organizations will prioritize transparency, equity, and learner autonomy, not just to comply with regulations but to build trustworthy, human-first AI.

14. Conclusion: Ethics as the Cornerstone of EdTech

AI offers powerful opportunities: AI tutors, predictive guidance, real-time assessments, and more. The question is how thoughtfully we implement them. Without a firm ethical foundation, these innovations risk eroding trust, privacy, and fairness.

By acting as responsible stewards (collecting data judiciously, ensuring security, monitoring bias, and empowering learner autonomy), we don’t just leverage technology; we build platforms that respect and uplift learners. Ethics and privacy aren’t obstacles to innovation. They are the backbone of genuinely transformative, human-centric e-learning.