Introduction:

In recent years, large language models have revolutionized the landscape of Artificial Intelligence and Natural Language Processing. These sophisticated AI systems, such as OpenAI’s GPT (Generative Pre-trained Transformer) series, have garnered significant attention for their ability to understand and generate human-like text. They represent a culmination of advances in machine learning, particularly in the field of deep learning and neural networks.

At their core, large language models (LLMs) are designed to process and generate human language. Unlike earlier language models, which were limited by smaller datasets and less complex architectures, today’s LLMs leverage vast amounts of data and powerful computing infrastructure to achieve unprecedented levels of performance. This evolution has been made possible by breakthroughs in hardware, such as specialized processors like GPUs and TPUs, and by software innovations in algorithm design and training methodology. Here are some key characteristics of LLMs:

• Contextual Understanding: LLMs can comprehend and generate text based on the context provided by surrounding words or sentences.

• Generative Capability: They can generate coherent and contextually relevant text, making them suitable for creative and practical applications.

• Versatility: They can perform a wide range of natural language processing tasks, often from a prompt alone, without task-specific fine-tuning.

• Scalability: LLMs can be trained on very large datasets across many processors, and their performance generally improves as model size and training data grow, enabling robust performance on diverse tasks.

• Transfer Learning: LLMs leverage pre-training on vast amounts of data, allowing efficient adaptation to specific tasks through fine-tuning.

Types of LLMs:

There are several types of Large Language Models (LLMs), each designed with specific architectures and objectives in mind. Here we mention two main types:

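• Autoregressive Models: These models generate text one token at a time, predicting each new token from the tokens that precede it. Examples include OpenAI’s GPT series; Google’s BERT, by contrast, is an encoder model trained with masked language modeling rather than autoregressive generation.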

• Conditional Generative Models: These models generate text conditioned on some input, such as a prompt or context. They are often used in applications like text completion and text generation with specific attributes or styles.
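To make the autoregressive idea concrete, here is a minimal Python sketch of greedy decoding. The tiny vocabulary, the `TOY_LOGITS` table and the function names are hypothetical: a real LLM computes its scores with a neural network conditioned on the whole prefix (this toy looks only at the last token), and it often samples from the distribution instead of always taking the most likely token.

```python
import math

# Hypothetical toy "model": maps the previous token to raw scores (logits)
# for each candidate next token. A real LLM conditions on the whole prefix.
TOY_LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0, "cat": 0.1, "sat": 0.1, "</s>": 0.0},
    "the": {"cat": 2.5, "a": 0.2, "the": 0.1, "sat": 0.3, "</s>": 0.0},
    "a": {"cat": 1.5, "the": 0.1, "a": 0.1, "sat": 0.2, "</s>": 0.0},
    "cat": {"sat": 2.2, "the": 0.1, "a": 0.1, "cat": 0.0, "</s>": 0.5},
    "sat": {"</s>": 3.0, "the": 0.4, "a": 0.2, "cat": 0.1, "sat": 0.0},
}


def softmax(scores):
    """Turn a dict of logits into a probability distribution."""
    m = max(scores.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - m) for tok, s in scores.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def next_token_probs(prefix):
    """Stand-in for a model forward pass (uses only the last token here)."""
    return softmax(TOY_LOGITS[prefix[-1]])


def generate_greedy(max_new_tokens=10):
    """Generate one token at a time, feeding each choice back as context."""
    tokens = ["<s>"]
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)  # greedy: most likely next token
        if best == "</s>":  # end-of-sequence token stops generation
            break
        tokens.append(best)  # the new token becomes part of the context
    return tokens[1:]


if __name__ == "__main__":
    print(" ".join(generate_greedy()))  # prints "the cat sat"
```

The loop is the essential part: each step produces a probability distribution over the next token, one token is chosen, and that token is appended to the input for the following step.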

Applications of LLMs:

The versatility of LLMs has led to their deployment in various applications, including:

- Translation: These models excel at translating text between languages, often with higher accuracy and fluency than traditional translation tools.

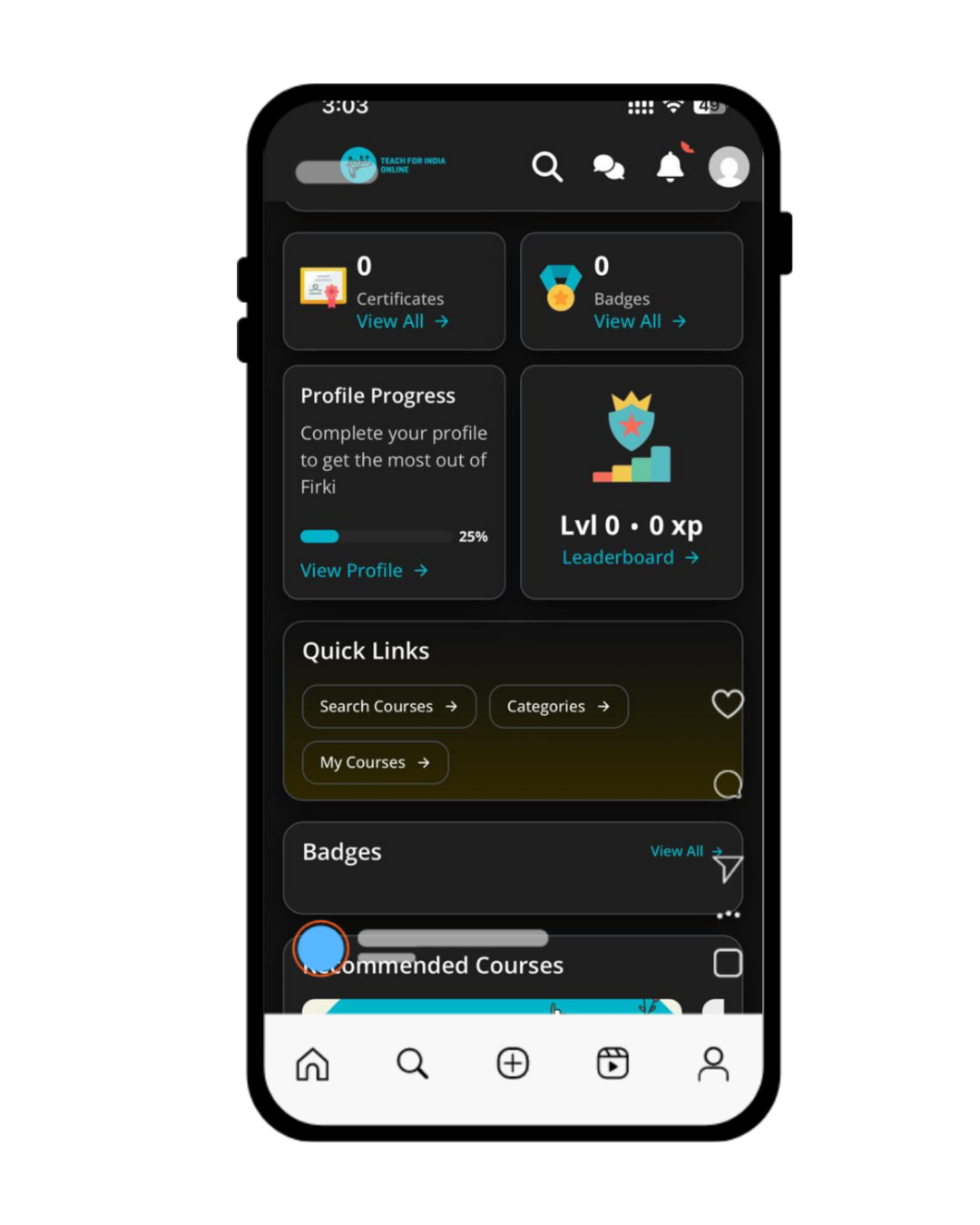

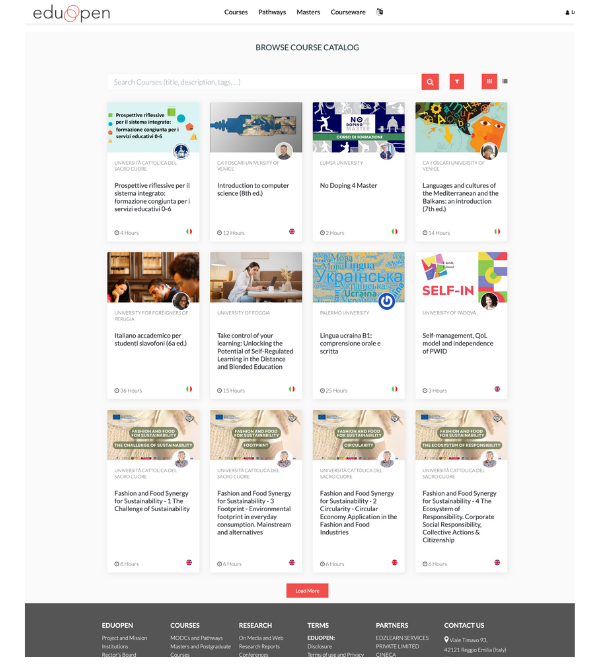

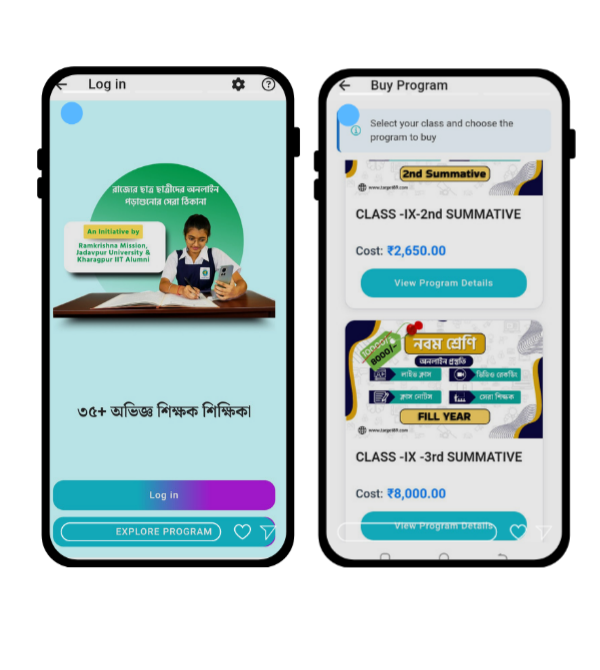

- Education: They are transforming education by enabling personalized learning experiences and generating educational content.

- Customer Support: Many companies use LLMs to build virtual assistants and chatbots that can handle customer queries and provide support autonomously.

- Healthcare: LLMs are used in the medical field for tasks like summarizing clinical notes, generating reports, and even assisting in diagnosis.

How LLMs Work:

At the core of LLMs lies the transformer architecture. Unlike earlier sequential models such as recurrent neural networks (RNNs), transformers handle long-range dependencies and context in text more effectively, allowing them to generate more coherent and contextually relevant outputs.
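The attention computation at the heart of the transformer can be sketched in plain Python. This is a minimal single-head version of scaled dot-product self-attention, softmax(QK^T / sqrt(d_k)) V; the function names and toy matrices are illustrative assumptions, and real models use learned projection weights, multiple attention heads, and (in GPT-style decoders) a causal mask that prevents each position from attending to later positions.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m), both as lists of rows."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention (no masking).

    x: one vector per token (n x d_model)
    w_q, w_k, w_v: projection matrices (d_model x d_k)
    Returns the output vectors (n x d_k) and the attention weights (n x n).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for qi in q:
        # Score this position against every position, including itself
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        w = softmax(scores)  # weights for this position sum to 1
        weights.append(w)
        # Output is the attention-weighted sum of the value vectors
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(d_k)])
    return outputs, weights


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
    identity = [[1.0, 0.0], [0.0, 1.0]]       # toy projections
    out, attn = self_attention(x, identity, identity, identity)
    print(attn)
```

Every position computes its scores against all other positions in the same step, which is what allows the whole sequence to be processed in parallel rather than token by token.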

- Transformers: The transformer model operates using an attention mechanism that weighs the importance of each word in a sentence relative to others, enabling the model to focus on relevant parts of the input.

- Attention Mechanism: This mechanism allows the model to consider all words in a sequence at once, rather than one at a time as in recurrent models. Because positions can be processed in parallel, training is much faster and scales better to large models and datasets.

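- Pre-training Objective: LLMs are pre-trained on large-scale text corpora using a self-supervised objective, in which the training targets come from the text itself. GPT-style models are trained to predict the next token (causal language modeling), while encoder models such as BERT use the following tasks: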

• Masked Language Modeling (MLM): A percentage of input tokens (15% in BERT) are randomly masked, and the model is trained to predict the masked tokens based on the context provided by the other tokens.

• Next Sentence Prediction (NSP): The model is trained to predict whether one sentence follows another in a given text corpus. This helps the model learn relationships between sentences.

- Training Data: LLMs require vast amounts of text data for pre-training. Common sources include books, websites, articles, and other text-rich sources from the internet. The larger and more diverse the dataset, the better the model typically performs.

- Architecture Layers:

• Input Embeddings: Input tokens (words or subword pieces) are first converted into numerical vectors (embeddings) that capture semantic meaning; positional information is added so the model can take word order into account.

• Transformer Blocks: Each block combines a self-attention layer with a feed-forward neural network, and many such blocks are stacked. Each block refines the model’s representation of the input text.

• Output Layer: Typically, a linear transformation followed by a softmax activation produces a probability distribution over the vocabulary, used to predict the next token in autoregressive models or the masked tokens in MLM; a similar layer can output class probabilities for classification tasks.

- Fine-Tuning: After pre-training on large datasets, LLMs can be fine-tuned on specific downstream tasks such as text classification, named entity recognition, and question answering. Fine-tuning adapts the pre-trained model to a specific task by slightly adjusting its parameters during task-specific training.

- Deployment: Once fine-tuned, the LLM can be deployed to perform various NLP tasks in real-world applications. The deployment can be on cloud platforms, edge devices, or integrated into larger software systems depending on the requirements.
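The masked language modeling objective from the pre-training discussion can be illustrated with a short Python sketch that builds one training example. The 15% selection rate and the 80/10/10 replacement split follow the recipe published for BERT; the function name, the `[MASK]` string and the word-level tokens are assumptions for illustration, since real models operate on subword token IDs.

```python
import random

MASK = "[MASK]"  # assumed placeholder string for the mask token


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Build one MLM training example (BERT-style masking recipe).

    Each token is selected with probability mask_prob. A selected token is
    replaced by [MASK] 80% of the time, by a random vocabulary token 10% of
    the time, and left unchanged 10% of the time. Labels hold the original
    token at selected positions and None where no prediction is made.
    """
    rng = random.Random(seed)
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover the original token
            r = rng.random()
            if r < 0.8:
                inputs.append(MASK)
            elif r < 0.9:
                inputs.append(rng.choice(vocab))
            else:
                inputs.append(tok)
        else:
            inputs.append(tok)
            labels.append(None)  # not a prediction target
    return inputs, labels


if __name__ == "__main__":
    sentence = "the cat sat on the mat".split()
    vocab = ["the", "cat", "sat", "on", "mat", "dog"]
    print(mask_tokens(sentence, vocab, mask_prob=0.5, seed=0))
```

During pre-training the model sees `inputs`, and the loss is computed only at positions where `labels` is not `None`.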

Challenges and Ethical Considerations:

Despite their capabilities, LLMs present several challenges:

• Misinformation: Their ability to generate convincing text raises concerns about the potential spread of misinformation and fake news.

• Privacy Concerns: Handling large datasets raises issues regarding data privacy and the potential for unintended leakage of sensitive information.

• Resource Intensity: Training and deploying LLMs require significant computational resources and energy, which can be a barrier for many organizations.

• Interpretability: Understanding the decisions made by LLMs can be challenging, making it difficult to ensure transparency and accountability.